The History of the Modern Graphics Processor

The evolution of the modern graphics processor begins with the introduction of the first 3D add-in cards in 1995, followed by the widespread adoption of 32-bit operating systems and the affordable personal computer.

The graphics industry that existed before that largely consisted of a more prosaic 2D, non-PC architecture, with graphics boards better known by their chip's alphanumeric naming conventions and their huge price tags. 3D gaming and virtualization PC graphics eventually coalesced from sources as diverse as arcade and console gaming, military, robotics and space simulators, as well as medical imaging.

The early days of 3D consumer graphics were a Wild West of competing ideas, from how to implement the hardware, to the use of different rendering techniques and their application and data interfaces, as well as the persistent naming hyperbole. The early graphics systems featured a fixed function pipeline (FFP), and an architecture following a very rigid processing path utilizing almost as many graphics APIs as there were 3D chip makers.

While 3D graphics turned a fairly dull PC industry into a light and magic show, they owe their existence to generations of innovative endeavor. This is the first installment in a series of five articles that, in chronological order, take an extensive look at the history of the GPU, going from the early days of 3D consumer graphics, to the 3Dfx Voodoo game-changer, the industry's consolidation at the turn of the century, and today's modern GPGPU.

1976 - 1995: The Early Days of 3D Consumer Graphics

The first true 3D graphics started with early display controllers, known as video shifters and video address generators. They acted as a pass-through between the main processor and the display. The incoming data stream was converted into serial bitmapped video output such as luminance, color, as well as vertical and horizontal composite sync, which kept the line of pixels in a display generation and synchronized each successive line along with the blanking interval (the time between ending one scan line and starting the next).

A flurry of designs arrived in the latter half of the 1970s, laying the foundation for 3D graphics as we know them. RCA's "Pixie" video chip (CDP1861) in 1976, for instance, was capable of outputting an NTSC compatible video signal at 62x128 resolution, or 64x32 for the ill-fated RCA Studio II console.

The video chip was quickly followed a year later by the Television Interface Adapter (TIA) 1A, which was integrated into the Atari 2600 for generating the screen display, sound effects, and reading input controllers. Development of the TIA was led by Jay Miner, who also led the design of the custom chips for the Commodore Amiga computer later on.

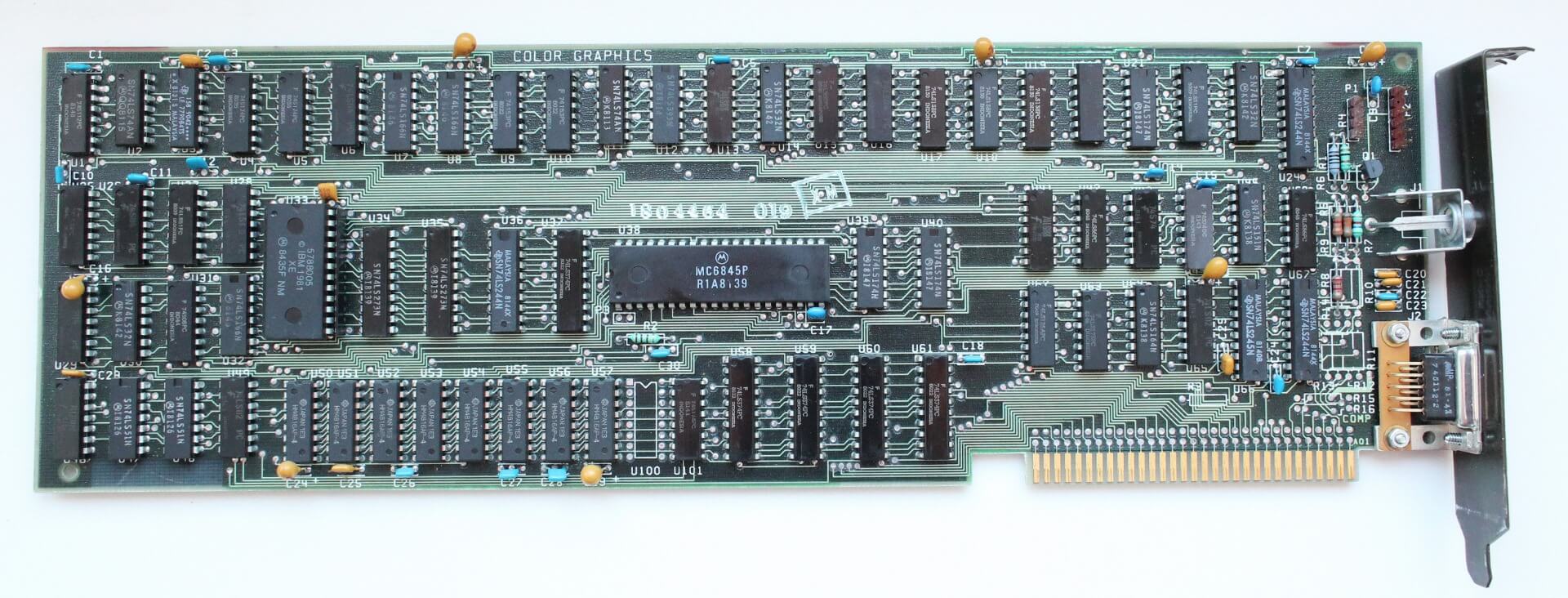

In 1978, Motorola unveiled the MC6845 video address generator. This became the basis for the IBM PC's Monochrome Display Adapter and Color Graphics Adapter (MDA/CGA) cards of 1981, and provided the same functionality for the Apple II. Motorola added the MC6847 video display generator later the same year, which made its way into a number of first generation personal computers, including the Tandy TRS-80.

A similar solution from Commodore's MOS Tech subsidiary, the VIC, provided graphics output for 1980-83 vintage Commodore home computers.

In November the following year, LSI's ANTIC (Alphanumeric Television Interface Controller) and CTIA/GTIA co-processor (Color or Graphics Television Interface Adaptor), debuted in the Atari 400. ANTIC processed 2D display instructions using direct memory access (DMA). Like most video co-processors, it could generate playfield graphics (background, title screens, scoring display), while the CTIA generated colors and moveable objects. Yamaha and Texas Instruments supplied similar ICs to a variety of early home computer vendors.

The next steps in the graphics evolution were primarily in the professional fields.

Intel used their 82720 graphics chip as the basis for the $1000 iSBX 275 Video Graphics Controller Multimode Board. It was capable of displaying eight color data at a resolution of 256x256 (or monochrome at 512x512). Its 32KB of display memory was sufficient to draw lines, arcs, circles, rectangles and character bitmaps. The chip also had provision for zooming, screen partitioning and scrolling.
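The two display modes quoted for the iSBX 275 line up neatly with its 32KB of display memory. The arithmetic below is only a sketch of that relationship: it assumes pixels are packed at the minimum whole number of bits needed for the color count (3 bits for eight colors, 1 bit for monochrome), which is an assumption for illustration rather than a statement about how the board actually laid out its memory. The function name is hypothetical.

```python
def framebuffer_bytes(width: int, height: int, colors: int) -> int:
    """Bytes needed for one frame, assuming tightly packed pixels.

    `colors` is the number of distinct colors per pixel; the bit depth is
    the smallest whole number of bits that can encode that many values.
    """
    bits_per_pixel = max(1, (colors - 1).bit_length())
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8  # round up to whole bytes


DISPLAY_MEMORY = 32 * 1024  # 32KB, as quoted for the board

color_mode = framebuffer_bytes(256, 256, colors=8)   # eight-color mode
mono_mode = framebuffer_bytes(512, 512, colors=2)    # monochrome mode

for name, size in (("256x256, 8 colors", color_mode),
                   ("512x512, monochrome", mono_mode)):
    print(f"{name}: {size} bytes, fits in 32KB: {size <= DISPLAY_MEMORY}")
```

Under that packing assumption the color mode needs 24KB and the monochrome mode exactly fills the 32KB, which would explain why resolution and color count trade off against each other.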

SGI quickly followed up with their IRIS Graphics for workstations -- a GR1.x graphics board with provision for separate add-in (daughter) boards for color options, geometry, Z-buffer and Overlay/Underlay.

Industrial and military 3D virtualization was relatively well developed at the time. IBM, General Electric and Martin Marietta (who were to buy GE's aerospace division in 1992), along with a slew of military contractors, technology institutes and NASA ran various projects that required the technology for military and space simulations. The Navy also developed a flight simulator using 3D virtualization from MIT's Whirlwind computer in 1951.

Besides defence contractors there were companies that straddled military markets with professional graphics.

Evans & Sutherland -- who were to provide professional graphics card series such as the Freedom and REALimage -- also provided graphics for the CT5 flight simulator, a $20 million package driven by a DEC PDP-11 mainframe. Ivan Sutherland, the company's co-founder, developed a computer program in 1961 called Sketchpad, which allowed drawing geometric shapes and displaying on a CRT in real-time using a light pen.

This was the progenitor of the modern Graphic User Interface (GUI).

In the less esoteric field of personal computing, Chips and Technologies' 82C43x series of EGA (Enhanced Graphics Adapter) chips provided much needed competition to IBM's adapters, and could be found installed in many PC/AT clones around 1985. The year was noteworthy for the Commodore Amiga as well, which shipped with the OCS chipset. The chipset comprised three main component chips -- Agnus, Denise, and Paula -- which allowed a certain amount of graphics and audio calculation to be non-CPU dependent.

In August of 1985, three Hong Kong immigrants, Kwok Yuan Ho, Lee Lau and Benny Lau, formed Array Technology Inc in Canada. By the end of the year, the name had changed to ATI Technologies Inc.

ATI got their first product out the following year, the OEM Color Emulation Card. It was used for outputting monochrome green, amber or white phosphor text against a black background to a TTL monitor via a 9-pin DE-9 connector. The card came equipped with a minimum of 16KB of memory and was responsible for a large percentage of ATI's CAD$10 million in sales in the company's first year of operation. This was largely done through a contract that supplied around 7000 chips a week to Commodore Computers.

The advent of color monitors and the lack of a standard among the array of competitors ultimately led to the formation of the Video Electronics Standards Association (VESA), of which ATI was a founding member, along with NEC and six other graphics adapter manufacturers.

In 1987 ATI added the Graphics Solution Plus series to its product line for OEMs, which used IBM's PC/XT ISA 8-bit bus for Intel 8086/8088 based IBM PCs. The chip supported MDA, CGA and EGA graphics modes via DIP switches. It was basically a clone of the Plantronics Colorplus board, but with room for 64KB of memory. Paradise Systems' PEGA1, 1a, and 2a (256KB) released in 1987 were Plantronics clones as well.

The EGA Wonder series 1 to 4 arrived in March for $399, featuring 256KB of DRAM as well as compatibility with CGA, EGA and MDA emulation with up to 640x350 and 16 colors. Extended EGA was available for the series 2, 3 and 4.

Filling out the high end was the EGA Wonder 800 with 16-color VGA emulation and 800x600 resolution support, and the VGA Improved Performance (VIP) card, which was basically an EGA Wonder with a digital-to-analog converter (DAC) added to provide limited VGA compatibility. The latter cost $449 plus $99 for the Compaq expansion module.

ATI was far from being alone riding the wave of consumer appetite for personal computing.

Many new companies and products arrived that year. Among them were Trident, SiS, Tamerack, Realtek, Oak Technology, LSI's G-2 Inc., Hualon, Cornerstone Imaging and Winbond -- all formed in 1986-87. Meanwhile, companies such as AMD, Western Digital/Paradise Systems, Intergraph, Cirrus Logic, Texas Instruments, Gemini and Genoa would produce their first graphics products during this timeframe.

ATI's Wonder series continued to gain prodigious updates over the next few years.

In 1988, the Small Wonder Graphics Solution with game controller port and composite out options became available (for CGA and MDA emulation), as well as the EGA Wonder 480 and 800+ with Extended EGA and 16-bit VGA support, and also the VGA Wonder and Wonder 16 with added VGA and SVGA support.

A Wonder 16 equipped with 256KB of memory retailed for $499, while a 512KB variant cost $699.

An updated VGA Wonder/Wonder 16 series arrived in 1989, including the reduced cost VGA Edge 16 (Wonder 1024 series). New features included a bus mouse port and support for the VESA Feature Connector. This was a gold-fingered connector similar to a shortened data bus slot connector, and it linked via a ribbon cable to another video controller to bypass a congested data bus.

The Wonder series updates continued to move apace in 1991. The Wonder XL card added VESA 32K color compatibility and a Sierra RAMDAC, which boosted maximum display resolution to 640x480 @ 72Hz or 800x600 @ 60Hz. Prices ranged through $249 (256KB), $349 (512KB), and $399 for the 1MB RAM option. A reduced cost version called the VGA Charger, based on the previous year's Basic-16, was also made available.

ATI added a variation of the Wonder XL that incorporated a Creative Sound Blaster 1.5 chip on an extended PCB. Known as the VGA Stereo-F/X, it was capable of simulating stereo from Sound Blaster mono files at something approximating FM radio quality.

The Mach series launched with the Mach8 in May of that year. It sold as either a chip or board that allowed, via a programming interface (AI), the offloading of limited 2D drawing operations such as line-draw, color-fill and bitmap combination (Bit BLIT).
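For readers unfamiliar with the bitmap combination (Bit BLIT) operation mentioned above, the following is a minimal software sketch of the idea: a source bitmap is copied onto a destination at a given offset, with each pixel pair combined through a raster operation such as OR or XOR. Accelerators like the Mach8 performed this kind of work in hardware; the function name, signature and 1-bit pixel representation here are purely illustrative assumptions and do not reflect ATI's actual interface.

```python
from typing import Callable, List

Bitmap = List[List[int]]  # rows of 1-bit pixels (0 or 1), illustrative only


def bit_blit(dest: Bitmap, src: Bitmap, x: int, y: int,
             op: Callable[[int, int], int] = lambda d, s: s) -> Bitmap:
    """Combine `src` into `dest` with its top-left corner at (x, y).

    `op` is the raster operation, applied per pixel as
    op(dest_pixel, src_pixel); the default is a plain copy.
    Pixels that would land outside `dest` are clipped.
    """
    for row, src_row in enumerate(src):
        for col, src_pixel in enumerate(src_row):
            dy, dx = y + row, x + col
            if 0 <= dy < len(dest) and 0 <= dx < len(dest[dy]):
                dest[dy][dx] = op(dest[dy][dx], src_pixel)
    return dest


screen = [[0] * 4 for _ in range(4)]
sprite = [[1, 1], [1, 1]]

bit_blit(screen, sprite, 1, 1, op=lambda d, s: d | s)  # OR the sprite in
bit_blit(screen, sprite, 2, 2, op=lambda d, s: d ^ s)  # XOR an overlapping copy

for line in screen:
    print("".join("#" if pixel else "." for pixel in line))
```

Doing this per pixel on the CPU is exactly the sort of repetitive work that made offloading it to a dedicated chip attractive once graphical user interfaces started moving windows and icons around the screen.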

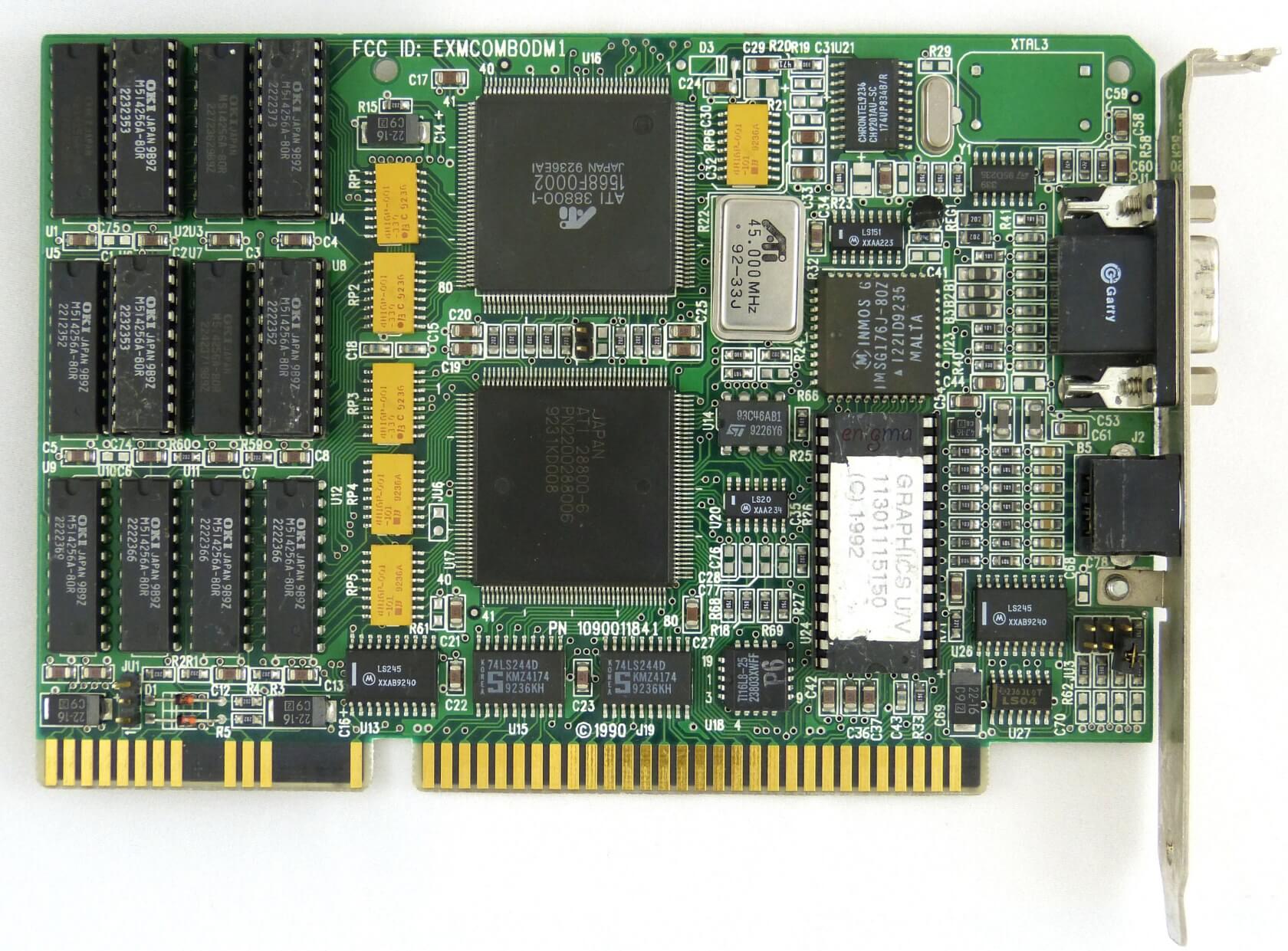

Graphics boards such as the ATI VGAWonder GT offered a 2D + 3D option, combining the Mach8 with the graphics core (28800-2) of the VGA Wonder+ for its 3D duties. The Wonder and Mach8 pushed ATI through the CAD$100 million sales milestone for the year, largely on the back of Windows 3.0's adoption and the increased 2D workloads that could be employed with it.

S3 Graphics was formed in early 1989 and produced its first 2D accelerator chip and a graphics card eighteen months later, the S3 911 (or 86C911). Key specs for the latter included 1MB of VRAM and 16-bit color support.

The S3 911 was superseded by the 924 that same year -- it was basically a revised 911 with 24-bit color -- and again updated the following year with the 928 which added 32-bit color, and the 801 and 805 accelerators. The 801 used an ISA interface, while the 805 used VLB. Between the 911's introduction and the advent of the 3D accelerator, the market was flooded with 2D GUI designs based on S3's original -- notably from Tseng Labs, Cirrus Logic, Trident, IIT, ATI's Mach32 and Matrox's MAGIC RGB.

In January 1992, Silicon Graphics Inc (SGI) released OpenGL 1.0, a multi-platform vendor agnostic application programming interface (API) for both 2D and 3D graphics.

OpenGL evolved from SGI's proprietary API, called the IRIS GL (Integrated Raster Imaging System Graphical Library). It was an initiative to strip non-graphical functionality out of IRIS GL, and allow the API to run on non-SGI systems, as rival vendors were starting to loom on the horizon with their own proprietary APIs.

Initially, OpenGL was aimed at the professional UNIX based markets, but with developer-friendly support for extension implementation it was quickly adopted for 3D gaming.

Microsoft was developing a rival API of their own called Direct3D and didn't exactly break a sweat making sure OpenGL ran as well as it could under the new Windows operating systems.

Things came to a head a few years later when John Carmack of id Software, whose previously released Doom had revolutionised PC gaming, ported Quake to use OpenGL on Windows and openly criticised Direct3D.

Microsoft's intransigence increased as they denied licensing of OpenGL's Mini-Client Driver (MCD) on Windows 95, which would allow vendors to choose which features would have access to hardware acceleration. SGI replied by developing the Installable Client Driver (ICD), which not only provided the same ability, but did so even better, since MCD covered rasterization only and ICD added transform and lighting functionality (T&L).

During the rise of OpenGL, which initially gained traction in the workstation arena, Microsoft was busy eyeing the emerging gaming market with designs on their own proprietary API. They acquired RenderMorphics in February 1995, whose Reality Lab API was gaining traction with developers and became the core for Direct3D.

At about the same time, 3dfx's Brian Hook was writing the Glide API that was to become the dominant API for gaming. This was in part due to Microsoft's involvement with the Talisman project (a tile based rendering ecosystem), which diluted the resources intended for DirectX.

As D3D became widely available on the back of Windows adoption, proprietary APIs such as S3d (S3), Matrox Simple Interface, Creative Graphics Library, C Interface (ATI), SGL (PowerVR), NVLIB (Nvidia), RRedline (Rendition) and Glide began to lose favor with developers.

It didn't help matters that some of these proprietary APIs were allied with board manufacturers under increasing pressure to add to a rapidly expanding feature list. This included higher screen resolutions, increased color depth (from 16-bit to 24 and then 32), and image quality enhancements such as anti-aliasing. All of these features called for increased bandwidth, graphics efficiency and faster product cycles.

The year 1993 ushered in a flurry of new graphics competitors, most notably Nvidia, founded in January of that year by Jen-Hsun Huang, Curtis Priem and Chris Malachowsky. Huang was previously the Director of Coreware at LSI while Priem and Malachowsky both came from Sun Microsystems where they had previously developed the SunSPARC-based GX graphics architecture.

Fellow newcomers Dynamic Pictures, ARK Logic, and Rendition joined Nvidia soon thereafter.

Market volatility had already forced a number of graphics companies to withdraw from the business, or to be absorbed by competitors. Among them were Tamerack, Gemini Technology, Genoa Systems, Hualon, Headland Technology (bought by SPEA), Acer, Motorola and Acumos (bought by Cirrus Logic).

One company that was moving from strength to strength, however, was ATI.

As a forerunner of the All-In-Wonder series, late November saw the announcement of ATI's 68890 PC TV decoder chip which debuted inside the Video-It! card. The chip was able to capture video at 320x240 @ 15 fps, or 160x120 @ 30 fps, as well as compress/decompress in real time thanks to the onboard Intel i750PD VCP (Video Compression Processor). It was also able to communicate with the graphics board via the data bus, thus negating the need for dongles or ports and ribbon cables.

The Video-It! retailed for $399, while a lesser featured model named Video-Basic completed the line-up.

Five months later, in March, ATI belatedly introduced a 64-bit accelerator: the Mach64.

The financial year had not been kind to ATI with a CAD$2.7 million loss as it slipped in the marketplace amid strong competition. Rival boards included the S3 Vision 968, which was picked up by many board vendors, and the Trio64 which picked up OEM contracts from Dell (Dimension XPS), Compaq (Presario 7170/7180), AT&T (Globalyst), HP (Vectra VE 4), and DEC (Venturis/Celebris).

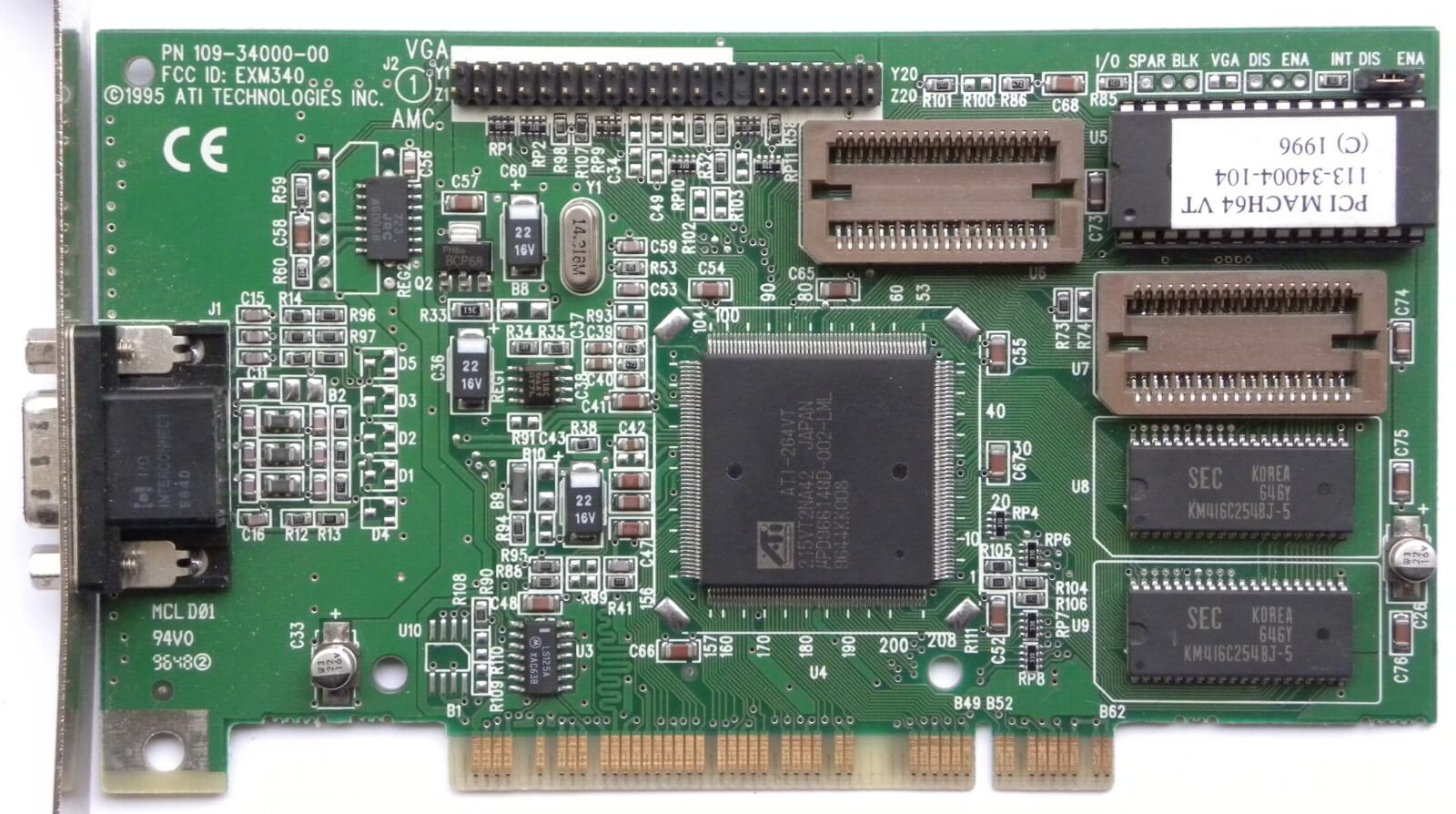

Released in 1995, the Mach64 notched a number of notable firsts. It became the first graphics adapter to be available for PC and Mac computers in the form of the Xclaim ($450 and $650 depending on onboard memory), and, along with S3's Trio, offered full-motion video playback acceleration.

The Mach64 also ushered in ATI's first pro graphics cards, the 3D Pro Turbo and 3D Pro Turbo+PC2TV, priced at a cool $599 for the 2MB option and $899 for the 4MB.

The following month saw a technology start-up called 3DLabs rise onto the scene, born when DuPont's Pixel graphics division bought the subsidiary from its parent company, along with the GLINT 300SX processor capable of OpenGL rendering, fragment processing and rasterization. Due to their high price the company's cards were initially aimed at the professional market. The Fujitsu Sapphire2SX 4MB retailed for $1600-$2000, while an 8MB ELSA GLoria 8 was $2600-$2850. The 300SX, however, was intended for the gaming market.

The Gaming GLINT 300SX of 1995 featured a much-reduced 2MB of memory. It used 1MB for textures and Z-buffer and the other for frame buffer, but came with an option to increase the VRAM for Direct3D compatibility for another $50 over the $349 base price. The card failed to make headway in an already crowded marketplace, but 3DLabs was already working on a successor in the Permedia series.

S3 seemed to be everywhere at that time. The high-end OEM market was dominated by the company's Trio64 chipsets that integrated DAC, a graphics controller, and clock synthesiser into a single chip. They also utilized a unified frame buffer and supported hardware video overlay (a dedicated portion of graphics memory for rendering video as the application requires). The Trio64 and its 32-bit memory bus sibling, the Trio32, were available as OEM units and standalone cards from vendors such as Diamond, ELSA, Sparkle, STB, Orchid, Hercules and Number Nine. Diamond Multimedia's prices ranged from $169 for a ViRGE based card, to $569 for a Trio64+ based Diamond Stealth64 Video with 4MB of VRAM.

The mainstream end of the market also included offerings from Trident, a long time OEM supplier of no-frills 2D graphics adapters who had recently added the 9680 chip to its line-up. The chip boasted most of the features of the Trio64 and the boards were generally priced around the $170-200 mark. They offered acceptable 3D performance in that bracket, with good video playback capability.

Other newcomers in the mainstream market included Weitek's Power Player 9130, and Alliance Semiconductor's ProMotion 6410 (usually seen as the Alaris Matinee or FIS's OptiViewPro). Both offered excellent scaling with CPU speed, while the latter combined the strong scaling engine with antiblocking circuitry to obtain smooth video playback, which was much better than in previous chips such as the ATI Mach64, Matrox MGA 2064W and S3 Vision968.

Nvidia launched their first graphics chip, the NV1, in May, and it became the first commercial graphics processor capable of 3D rendering, video acceleration, and integrated GUI acceleration.

They partnered with ST Microelectronics to produce the chip on their 500nm process and the latter also promoted the STG2000 version of the chip. Although it was not a huge success, it did represent the first financial return for the company. Unfortunately for Nvidia, just as the first vendor boards started shipping (notably the Diamond Edge 3D) in September, Microsoft finalized and released DirectX 1.0.

The D3D graphics API confirmed that it relied upon rendering triangular polygons, where the NV1 used quad texture mapping. Limited D3D compatibility was added via driver to wrap triangles as quadratic surfaces, but a lack of games tailored for the NV1 doomed the card as a jack of all trades, master of none.

Most of the games were ported from the Sega Saturn. A 4MB NV1 with integrated Saturn ports (two per expansion bracket connected to the card via ribbon cable), retailed for around $450 in September 1995.

Microsoft's late changes and launch of the DirectX SDK left board manufacturers unable to directly access hardware for digital video playback. This meant that virtually all discrete graphics cards had functionality issues in Windows 95. Drivers under Win 3.1 from a variety of companies were generally faultless by contrast.

ATI announced their first 3D accelerator chip, the 3D Rage (also known as the Mach 64 GT), in November 1995. The first public demonstration of it came at the E3 video game conference held in Los Angeles in May the following year. The card itself became available a month later. The 3D Rage merged the 2D core of the Mach64 with 3D capability.

Late revisions to the DirectX specification meant that the 3D Rage had compatibility problems with many games that used the API -- mainly the lack of depth buffering. With an on-board 2MB EDO RAM frame buffer, 3D modality was limited to 640x480x16-bit or 400x300x32-bit. Attempting 32-bit color at 600x480 generally resulted in onscreen color corruption, and 2D resolution peaked at 1280x1024. If gaming performance was mediocre, the full screen MPEG playback ability at least went some way in balancing the feature set.
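A rough back-of-the-envelope check shows why a 2MB frame buffer imposes limits like these. The sketch below assumes 3D rendering keeps two full color buffers in memory (front and back), which is a common arrangement but an assumption made here for illustration; the article does not say how the 3D Rage actually partitioned its memory. The function names are hypothetical.

```python
MIB = 1024 * 1024


def mode_bytes(width: int, height: int, bits_per_pixel: int,
               buffers: int = 1) -> int:
    """Bytes needed to hold `buffers` full frames at the given mode."""
    return width * height * (bits_per_pixel // 8) * buffers


def fits(width: int, height: int, bits_per_pixel: int,
         memory_bytes: int = 2 * MIB, buffers: int = 2) -> bool:
    """True if the mode fits in memory (double buffering assumed by default)."""
    return mode_bytes(width, height, bits_per_pixel, buffers) <= memory_bytes


# The three 3D modes mentioned in the text above.
for width, height, bpp in ((640, 480, 16), (400, 300, 32), (600, 480, 32)):
    needed = mode_bytes(width, height, bpp, buffers=2)
    print(f"{width}x{height}x{bpp}-bit: {needed} bytes double buffered, "
          f"fits in 2MB: {fits(width, height, bpp)}")
```

Under the double-buffering assumption, the two supported modes fit comfortably, while 32-bit color at 600x480 overshoots the 2MB budget, which is at least consistent with the corruption described.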

ATI reworked the chip, and in September the Rage II launched. It rectified the D3DX issues of the first chip in addition to adding MPEG2 playback support. Initial cards, however, still shipped with 2MB of memory, hampering performance and having issues with perspective/geometry transform. As the series was expanded to include the Rage II+DVD and 3D Xpression+, memory capacity options grew to 8MB.

While ATI was first to market with a 3D graphics solution, it didn't take too long for other competitors with differing ideas of 3D implementation to arrive on the scene, namely 3dfx, Rendition, and VideoLogic.

In the race to release new products into the marketplace, 3Dfx Interactive won over Rendition and VideoLogic. The performance race, however, was over before it had started, with the 3Dfx Voodoo Graphics effectively annihilating all competition.

This is the first article in our History of the GPU series. If you enjoyed this, keep reading as we take a stroll down memory lane to the heyday of 3Dfx, Rendition, Matrox and a young company called Nvidia.

Source: https://www.techspot.com/news/52069-history-modern-graphics-processor-part-1.html
